Yesterday I had the great pleasure of being part of the global WiDS2017 event showcasing women in all aspects of data science. The main conference was held at Stanford, but over 75 locations worldwide had rebroadcasts and local events, of which Reading was one. In addition to spending a great evening with some amazing women, I was asked to speak on the career panel about my experiences and overall journey. Continue reading WiDS2017: Women in Data Science Reading

Category: Technology

Learning Fortran – a blast from the past

Over the weekend, I was clearing out some old paperwork and I found the notes from one of the assessed practical sessions at university. Although I was studying biochemistry, an understanding of basic programming was essential, with many extra optional uncredited courses available. It was a simple chemical reactions task and we could use BASIC or Fortran to code the solution. I’d been coding in BASIC since I was a child, so I decided to go for the Fortran option, as what’s the point in doing something easy…. Continue reading Learning Fortran – a blast from the past

Anything you can do AI can do better (?): Playing games at a new level

Learning to play games has been a great test for AI. Being able to generalise from relatively simple rules to find optimal solutions shows a form of intelligence that we humans always hoped would be impossible. Back in 1997, when IBM’s Deep Blue beat Garry Kasparov at chess, we saw that machines were capable of more than brute force solutions to problems. Twenty years later, not only has AI mastered Go, with Google’s DeepMind winning 4-1 against the world’s best player, and Jeopardy, with IBM’s Watson, but there have also been some great examples of game play with many of the games I grew up playing: Tetris, PacMan, Space Invaders and other Atari games. I am yet to see any AI complete Repton 2. Continue reading Anything you can do AI can do better (?): Playing games at a new level

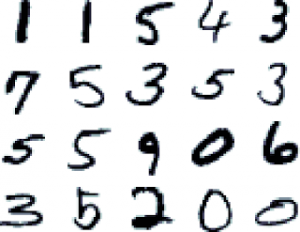

Artificial images: seeing is no longer believing

“Pics or it didn’t happen” – it’s a common request when telling a tale that might be considered exaggerated. Usually, supplying a picture or video of the event is enough to convince your audience that you’re telling the truth. However, we’ve been living in an age of Photoshop for a while and it has (or really should have!!!) become a habit to check Snopes and other sites before believing even simple images – they even have a tag for images debunked as photoshopped. Continue reading Artificial images: seeing is no longer believing

How to build a human – review

Ahead of season 2 of Humans, Channel 4 screened a special showing how a synthetic human could be produced. If you missed the show and are in the UK, you can watch again on 4OD.

Presented by Humans actress Gemma Chan, the show combined realistic prosthetic generation with AI to create a synth, but also dug a little deeper into the technology, showing how pervasive AI is in the western world.

There was a great scene with Prof Noel Sharkey and the self-driving car where they attempted a bend, but human instinct took over: “It nearly took us off the road!” “Shit, yes!”. This reinforced the problem of delegating what could be life or death decisions – how can a car make moral decisions, and should it even be allowed to? Continue reading How to build a human – review

ReWork Deep Learning London 2016 Day 1 Morning

In September 2016, the ReWork team organised another deep learning conference in London. This is the third of their conferences I have attended and each time they continue to be a fantastic cross section of academia, enterprise research and start-ups. As usual, I took a large number of notes on both days and I’ll be putting these up as separate posts; this one covers the morning of day 1. For reference, the notes from previous events can be found here: Boston 2015, Boston 2016.

Continue reading ReWork Deep Learning London 2016 Day 1 Morning

Amazon Echo Dot (second generation): Review

When I attended the ReWork Deep Learning conference in Boston in May 2016, one of the most interesting talks was about the Echo and the Alexa personal assistant from Amazon. As someone whose day job is AI, it seemed only right that I surround myself with as much AI as possible from other companies. This week, after being on back order for a while, my Echo Dot finally arrived. At £50, the Echo Dot is reasonably priced, and the only negative I was aware of before ordering was a reviewer’s comment that the sound quality “wasn’t great”. Continue reading Amazon Echo Dot (second generation): Review

Formula AI – driverless racing

We’re all starting to get a bit blasé about self-driving cars now. They were a novelty when they first came out, but even if the vast majority of us have never seen one, let alone been in one, we know they’re there, that they work, and that they are getting better with each iteration (which is phenomenally fast). But after watching the Formula 1 racing, it’s a big step from a 30mph trundle around a city to over 200mph racing around a track with other cars. Or is it? Continue reading Formula AI – driverless racing

Literate programming – effect on performance

After my introductory post on Literate Programming, it occurred to me that while the concept of being able to create documentation that includes variables from the code being run is amazing, this will obviously have some impact on performance. At best, this would be the resource required to compile the document as if it was static, while the “at worst” scenario is conceptually unbounded. Somewhere along the way, pweave is adding extra code to pass the variables back and forth between the Python and the LaTeX; how and when it does this could have implications that you wouldn’t see in a simple example but could be catastrophic when running the kind of neural nets that my department are putting together. So, being a scientist, I decided to run a few experiments…. Continue reading Literate programming – effect on performance

Using Literate Programming in Research

Over my career in IT there have been a lot of changes in documentation practices, from the heavy detailed design up front to lean and now the adoption of literate programming, particularly in research (and somewhat confined to it because of the reliance on LaTeX as a markup language). While there are plenty of getting started guides out there, this post is primarily about why I’m adopting it for my new Science and Innovations department and the benefits that literate programming can give. Continue reading Using Literate Programming in Research