I’m currently building a team for my new secret project, and far more of my time than I’d like is spent on recruitment. However, every minute of that time is necessary, and we’re at a point where none of it can be handed off to an agency even if I wanted to. So getting the recruitment process right is essential.

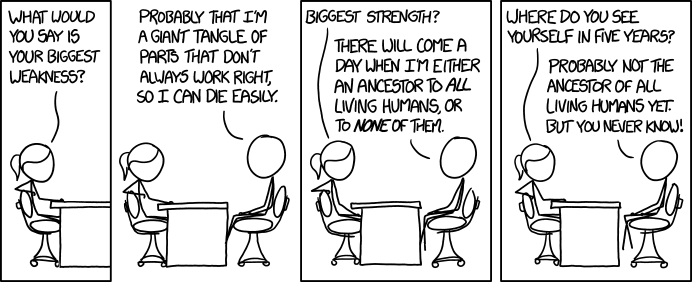

One of the basic principles of management in any industry is that if you set metrics for your team, they will adapt to maximise those metrics. Set a minimum number of bugs to be resolved and you’ll find the easy ones get picked off; set an average number of features to deliver and you’ll find everything held together with string; set too many metrics to cover all bases and you’ll end up with none of them hit and a demoralised (or non-existent) team. The same is true of recruitment: you will end up hiring people who pass whatever recruitment tasks you set, not necessarily the type of person the company needs. While this may appear obvious, think back to the last interview you were at, either as the interviewer or interviewee: how much relation did the process really have to the role?

When I started recruiting for my new team, I knew I had neither the time nor resources to make mistakes. I had to get this right first time.