September is always a busy month in London for AI, but one of the events I always prioritise is ReWork; they manage to pack a lot into two days and I always come away inspired. I was live-tweeting the event, but also made quite a few notes, which I’ve written up in more detail below. This is part one of at least three parts and I’ll add links between the posts as I finish them.

After a few problems with the trains I arrived right at the end of Fabrizio Silvestri’s talk on how Facebook use data. There was a very interesting question on avoiding self-referential updates to the algorithm, although I lacked the context to follow the answer.

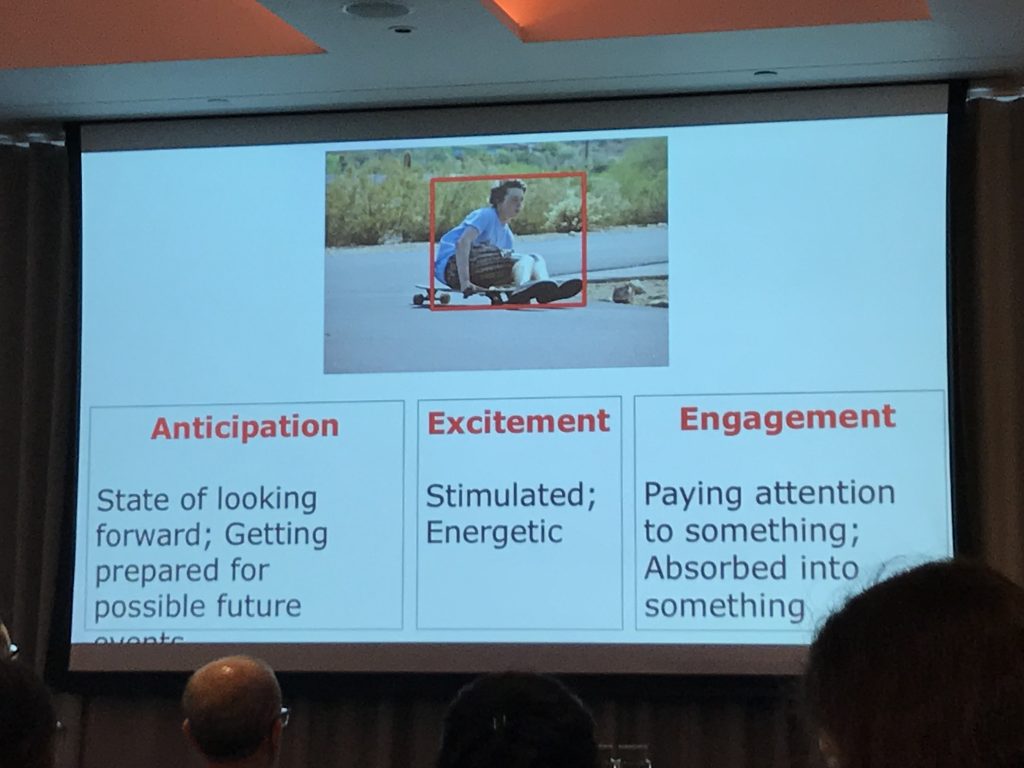

So the first full talk of the day for me was Agata Lapedriza from Universitat Oberta de Catalunya and MIT, who spoke about emotion in images. Generally, emotion recognition in computer vision looks solely at the face. While this can give some good results, it can go badly wrong when the emotion is ambiguous. She showed a picture of a face and how the same face in different contexts could show disgust, anger, fear or contempt: what is going on around the face is important for us humans to identify the correct emotion. Similarly, facial expressions that look like emotions can be misinterpreted out of context. A second image of a child who looked surprised turned out to be a boy blowing out the candles on his birthday cake. We are good at interpreting what is going on in scenes, or at least we think we are. Agata showed a low-resolution video of what appeared to be a man working on a computer in an office. We’d filled in the details by upsampling in our minds, only to discover that the actual video showed the man talking into a shoe and using a stapler as a mouse, while wearing drinks cans as earphones and staring at a rubbish bin rather than a monitor!

The first problem was training data. A Google search gave them faces but with limited labels, so the labelling was crowdsourced and the annotations averaged to get a full set of emotional descriptions. To put emotions in context they created a system with two parallel convolutional networks: one for the scene and the other for the faces. By combining the two they got much better results than by looking at the faces or the scenes alone. Labelled training data was sparse (approximately 20 thousand examples), so they used networks pretrained on ImageNet and on scene data. Even with this very complicated task they got a reasonable answer in about half of the cases.
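To make the two-stream idea concrete, here is a minimal sketch of late fusion, assuming each stream has already produced a feature vector (standing in for the two pretrained CNNs) and that fusion is simple concatenation followed by one linear layer and a softmax. The label subset, weights and feature values are all hypothetical and chosen by hand; this is not the architecture from the talk, just an illustration of how scene features can flip a face-only prediction.

```python
import math
from typing import List

EMOTIONS = ["anger", "disgust", "fear", "surprise"]  # hypothetical label subset

# Hypothetical fused-classifier weights: 4 inputs (2 face + 2 scene) -> 4 emotions.
WEIGHTS = [
    [1.0, 0.0, 0.0, 0.0],  # anger: driven by face feature 0
    [0.0, 1.0, 0.0, 0.0],  # disgust: driven by face feature 1
    [0.5, 0.0, 0.0, 0.5],  # fear: a mix of face and scene
    [0.0, 0.0, 0.0, 2.0],  # surprise: strongly driven by scene feature 1 (e.g. "party")
]
BIAS = [0.0, 0.0, 0.0, 0.0]


def softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def linear(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """One output per weight row: dot(row, x) + bias."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def predict(face_features, scene_features):
    """Concatenate the two streams' features, then classify the fused vector."""
    fused = list(face_features) + list(scene_features)
    probs = softmax(linear(fused, WEIGHTS, BIAS))
    return EMOTIONS[probs.index(max(probs))], probs


face = [0.9, 0.1]  # hypothetical face-stream output
print(predict(face, [0.0, 0.8])[0])  # surprise (with scene context)
print(predict(face, [0.0, 0.0])[0])  # anger (scene features zeroed out)
```

Concatenation is the simplest fusion choice; a real system might instead weight or gate the two streams.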

The biggest potential for this is to build empathy recognition into the machines that will inevitably surround us as our technology expands. There was a great question on data annotation and how variable humans are at this task. Agata suggested that an artificial system should match the average of the human distribution in understanding emotion. She also noted that it’s difficult to manage the balance between what is seen and the context. More experiments to come in this area, I think! You can see a demo at http://sunai.uoc.edu/emotic/
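One way to read "match the average of the human distribution" is to turn the crowdsourced votes for each image into a label distribution and score a model by how far its predicted distribution is from it. The sketch below does that with KL divergence; the votes, labels and both "models" are hypothetical, and KL divergence is my choice of measure rather than anything stated in the talk.

```python
import math
from collections import Counter

LABELS = ["happiness", "surprise", "fear"]  # hypothetical label subset


def average_annotations(votes, labels):
    """Turn several annotators' votes for one image into a label distribution."""
    counts = Counter(votes)
    return [counts[label] / len(votes) for label in labels]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q): how far a model's distribution q is from the human distribution p."""
    return sum(pi * math.log(pi / max(qi, eps)) for pi, qi in zip(p, q) if pi > 0)


votes = ["surprise", "surprise", "happiness", "surprise", "fear"]  # hypothetical annotators
human = average_annotations(votes, LABELS)  # [0.2, 0.6, 0.2]

matches_humans = [0.2, 0.6, 0.2]  # spreads probability like the annotators do
overconfident = [0.0, 1.0, 0.0]   # only ever predicts the majority vote

print(kl_divergence(human, matches_humans))  # 0.0
print(kl_divergence(human, overconfident) > kl_divergence(human, matches_humans))  # True
```

Under this measure a model that reproduces human disagreement scores better than one that confidently picks the majority label.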

Next up was someone I’ve heard speak before and who always has some interesting work to present: Raia Hadsell from DeepMind, discussing deep reinforcement learning. Games lend themselves really well to reinforcement learning as they are microcosms with large amounts of detail and built-in success metrics. Raia was particularly interested in navigation tasks and whether reinforcement learning could solve them.

Starting with a simulated maze: could an agent learn how to get to a goal from a random spawn point on the map? In two dimensions, with a top-down view and full visibility, this is an easy problem. In a three-dimensional (simulated) maze where you can only see the view in front of you, it is a much harder problem as you have to build the map yourself. They had success with their technique, which included a secondary LSTM component for longer-term memory, and asked whether this technique could work in the real world.
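To show why the fully visible 2D case is "easy", here is a classical breadth-first search over a top-down grid. This is deliberately not reinforcement learning and not DeepMind’s method: it only illustrates that once the whole map is visible, finding the goal is a standard graph search. The maze is a hypothetical example ('.' is open, '#' is wall). In the first-person 3D setting the agent never sees this grid, which is why it needs memory (the LSTM) to build up something equivalent.

```python
from collections import deque


def shortest_path_length(grid, start, goal):
    """Breadth-first search over open cells ('.'); returns the number of moves, or None."""
    rows, cols = len(grid), len(grid[0])
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        (r, c), dist = queue.popleft()
        if (r, c) == goal:
            return dist
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == "." and (nr, nc) not in seen:
                seen.add((nr, nc))
                queue.append(((nr, nc), dist + 1))
    return None  # goal unreachable


MAZE = [
    "..#.",
    ".##.",
    "....",
]
print(shortest_path_length(MAZE, (0, 0), (0, 3)))  # 7
```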

Using Google Maps and Street View, they created “StreetLearn” as a reinforcement learning environment. Using Street View images cropped to 84 x 84 pixels, they built maps of several key cities (London, Paris, New York) and gave the system a courier task: navigate to a goal and then be given a further goal that requires more navigation. They tried describing the goal both with lat/long coordinates and with distances and directions from specific landmarks. Similar results were observed with both options. They also applied a learning curriculum in which the targets started close to the spawn point and then moved further away as the system learned.
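The curriculum idea can be sketched as a goal sampler whose allowed distance from the spawn point grows with training progress. Everything here is an assumption for illustration: the linear schedule, the radius values, the Euclidean distance on abstract (x, y) coordinates and the fallback rule. The real environment works on street-level panoramas and its curriculum details were not given in the talk.

```python
import math
import random


def curriculum_radius(progress, min_radius=1.0, max_radius=10.0):
    """Linearly grow the allowed goal distance as training progress goes from 0 to 1."""
    progress = min(max(progress, 0.0), 1.0)
    return min_radius + progress * (max_radius - min_radius)


def sample_goal(spawn, candidate_goals, progress, rng):
    """Pick a goal within the current curriculum radius of the spawn point."""
    radius = curriculum_radius(progress)
    nearby = [g for g in candidate_goals if math.dist(spawn, g) <= radius]
    if not nearby:  # fall back to the closest goal if nothing is in range yet
        return min(candidate_goals, key=lambda g: math.dist(spawn, g))
    return rng.choice(nearby)


rng = random.Random(0)
goals = [(1, 0), (5, 0), (10, 0)]  # hypothetical goal locations
print(sample_goal((0, 0), goals, progress=0.0, rng=rng))  # (1, 0): only the nearest goal is allowed
```

In practice "progress" might be driven by the agent’s recent success rate rather than by elapsed training time.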

There was a beautiful video demonstration showing this in action in both London and Paris, although traffic was not modelled. Raia then went on to discuss transfer learning, where the system was trained on subsections of New York, then the rest of the network was frozen and only the LSTM was retrained for the new area. This showed great results, with a lot of the task awareness encoded and transferable, leaving only the map to be learned anew.
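Freezing most of a network and retraining one part simply means the optimiser skips the frozen parameters. The sketch below shows that with a plain SGD step over named parameter groups; the group names, values and gradients are hypothetical, and this is not the StreetLearn training code.

```python
def sgd_step(params, grads, trainable, lr=0.1):
    """One SGD update that only touches the parameter groups named in `trainable`."""
    updated = {}
    for name, values in params.items():
        if name in trainable:
            updated[name] = [w - lr * g for w, g in zip(values, grads[name])]
        else:
            updated[name] = list(values)  # frozen: copied unchanged
    return updated


params = {
    "vision_cnn": [0.5, -0.2],  # hypothetical: generic visual features
    "policy": [0.3],            # hypothetical: task-level behaviour
    "lstm": [0.1, 0.4],         # hypothetical: city-specific memory
}
grads = {"vision_cnn": [1.0, 1.0], "policy": [1.0], "lstm": [1.0, -1.0]}

new_params = sgd_step(params, grads, trainable={"lstm"})
print(new_params["vision_cnn"])  # [0.5, -0.2] (frozen)
print(new_params["lstm"])
```

In a framework such as PyTorch the same effect is usually achieved by setting `requires_grad = False` on the frozen parameters.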

I always love hearing space talks; it’s an industry I would have loved to work in. James Parr of NASA FDL gave a great talk on how AI is essential as a keystone capability across the board for FDL. There are too many data sets and too many problems for humans alone. The TESS mission is searching for exoplanets across about 85% of the sky and produces enough data to fill the Sears Tower (although he didn’t specify in what format 🙂). Analysing this data used to be done manually and is now moving to AI. Although there was little scientific depth to the talk, he gave a great overview of the many projects where AI is being used: moon mapping, identifying meteorites in a debris field and asteroid shape modelling. It takes a human one month to model the shape of an asteroid, and NASA has a huge backlog. Other applications include assistance in disaster response, combining highly detailed pre-existing imagery with lower-resolution satellite data that can see through the cloud cover in, for example, hurricanes. If you want to know more about what they do, check out nasa.ai

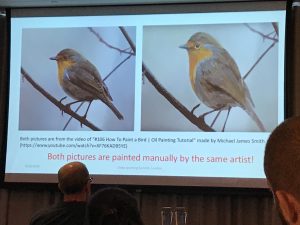

Qiang Huang from the University of Surrey followed with a fascinating talk on artificial image generation. Currently, we use Generative Adversarial Networks (GANs) to create images from limited inputs, and there’s been moderate success with this. Images can be described in three ways: shape, which is very distinctive and helps localise objects within the image; colour, which can be further split into hue, saturation and value; and finally texture, the arrangement and frequency of variation. So how do humans draw colour pictures? He showed us a slide with two images of a bird and asked us to pick which of them had been painted by a human. We all fell for it and were surprised when he said that both had. Artists tend to draw the contours of the image first and then add detail. Could this approach improve artificial image generation? They created a two-GAN approach: the first GAN creates outlines of birds and the second uses these outlines to generate the images. While the results he showed were impressive, there is still a way to go before the images are realistic. I wonder how many other AI tasks could be improved by breaking them down into the way humans solve the problem…
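As a small illustration of splitting colour into components, Python’s standard `colorsys` module converts RGB to HSV (hue, saturation, value). This only shows the colour decomposition itself, not anything from the two-GAN model; the example colours are arbitrary.

```python
import colorsys


def describe_colour(r, g, b):
    """Split an 8-bit RGB colour into hue (degrees), saturation and value (both 0..1)."""
    h, s, v = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    return round(h * 360), round(s, 2), round(v, 2)


print(describe_colour(255, 0, 0))      # (0, 1.0, 1.0): pure red
print(describe_colour(128, 0, 0))      # (0, 1.0, 0.5): same hue, lower value
print(describe_colour(0, 0, 255))      # (240, 1.0, 1.0): pure blue
print(describe_colour(128, 128, 128))  # (0, 0.0, 0.5): grey has no saturation
```

Separating hue from value like this is one reason outline-then-colour pipelines are attractive: shape and colour can be handled by different stages.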

Tracking moving objects is an old computer vision task and one that can quickly become challenging. Adam Kosiorek spoke about visual attention methods. With soft attention, the whole image is processed, even the parts that aren’t relevant, which can be wasteful. With spatial transformers you can extract a glimpse, which can be more efficient and also allows transformations. He demonstrated single-object tracking just from initialising a box around a target object and then discussed a new approach: hierarchical attentive recurrent tracking. Looking at the object rather than the whole image gives higher efficiency, with a glimpse (soft attention) used to find the object and mask it. The object is then analysed in feature space and its movements predicted. He showed a demo of this system tracking single cars and it worked well. Can we track multiple objects at once, without supervision, and generate moving objects using this technique? Yes, but it needs some supervision. He also showed a different model for multiple objects: Sequential Attend, Infer, Repeat (SQAIR), an unsupervised generative model with a strong object prior to avoid further supervision. SQAIR has more consistency and a common-sense intuition: if it moves together then it belongs together. Adam showed toy results using animated MNIST and also CCTV data with a static background; it shows promise but is currently computationally inefficient.
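The glimpse idea can be sketched with the bilinear sampling that spatial transformers rely on: instead of processing every pixel, sample a small grid centred on the object, optionally scaled. This sketch handles only translation and scale, and the centre and scale are given directly; in a tracker they would be predicted by the network each frame. The image and parameter values are hypothetical.

```python
import math
from typing import List

Image = List[List[float]]


def bilinear(img: Image, y: float, x: float) -> float:
    """Bilinearly sample img at fractional (y, x); out-of-bounds pixels count as 0."""
    h, w = len(img), len(img[0])
    y0, x0 = math.floor(y), math.floor(x)
    dy, dx = y - y0, x - x0
    total = 0.0
    for yy, wy in ((y0, 1 - dy), (y0 + 1, dy)):
        for xx, wx in ((x0, 1 - dx), (x0 + 1, dx)):
            if 0 <= yy < h and 0 <= xx < w:
                total += wy * wx * img[yy][xx]
    return total


def extract_glimpse(img: Image, centre_y: float, centre_x: float, scale: float, size: int) -> Image:
    """Sample a size x size glimpse centred on (centre_y, centre_x).

    `scale` is the number of input pixels per glimpse pixel (>1 zooms out, <1 zooms in).
    """
    half = (size - 1) / 2
    return [
        [bilinear(img, centre_y + (i - half) * scale, centre_x + (j - half) * scale) for j in range(size)]
        for i in range(size)
    ]


image = [[float(4 * r + c) for c in range(4)] for r in range(4)]  # hypothetical 4x4 frame
print(extract_glimpse(image, 1.5, 1.5, scale=1.0, size=2))  # [[5.0, 6.0], [9.0, 10.0]]
```

Because bilinear sampling is differentiable with respect to the centre and scale, a network can learn where to look, which is what makes this kind of glimpse trainable end to end.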

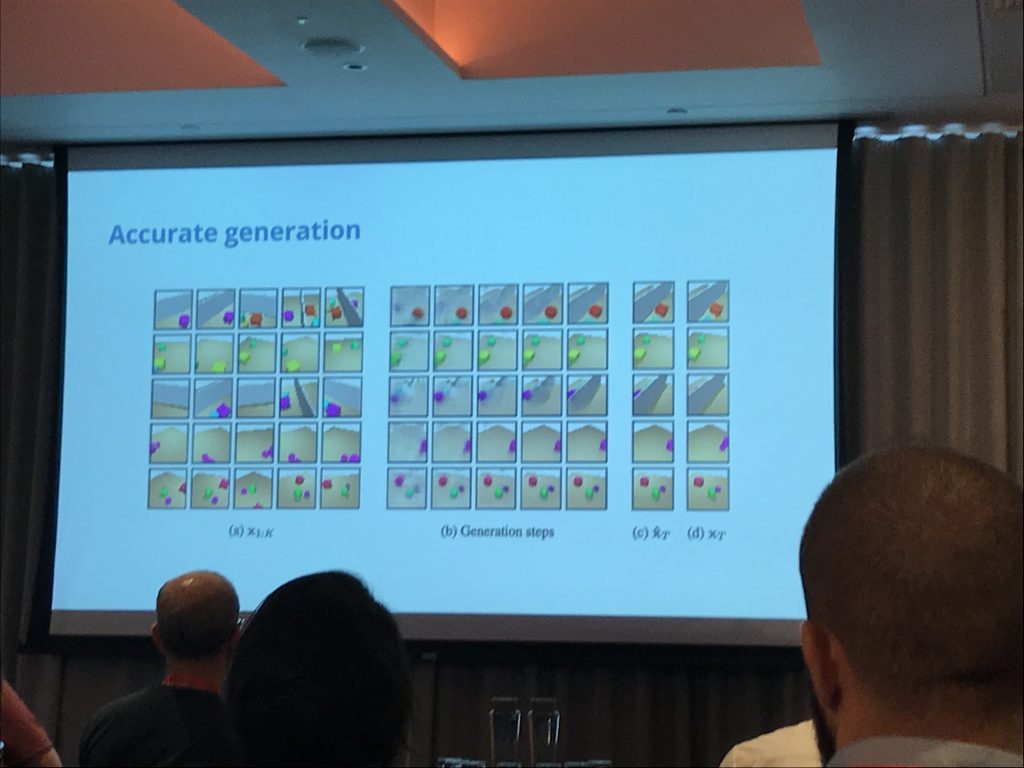

Another DeepMind speaker was up next: Ali Eslami on scene representation and rendering. Usually this is done with a large data set and current models work well, but this is not representative of how we learn. Similarly, the model is only as strong as the data set and will not go beyond its boundaries. They asked whether computers could learn a representation such that the scene could be imagined from any viewpoint. In essence, yes. Ali showed a lovely set of examples showing both the rendered scene and the intermediate steps. What was fascinating was that when the scene was rotated so the objects were behind a wall, the network placed the objects first and then occluded them. The best results shown were with 5 training images, but even with one image good results could be seen. There was some uncertainty, but it was bounded within reality (i.e. no dragons hiding behind walls!).
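One simple way for a single network to accept one image or five is to encode each observed view separately and sum the results into a fixed-size scene representation; as far as I know, this summation is how DeepMind’s published Generative Query Network aggregates views. The sketch below shows only that aggregation step. The per-view encoder is a hypothetical toy stand-in for a learned network, and the feature and viewpoint values are made up.

```python
from typing import List, Sequence, Tuple

Vector = List[float]


def encode_view(image_features: Sequence[float], viewpoint: Sequence[float]) -> Vector:
    """Hypothetical per-view encoder: a toy stand-in for a learned network."""
    v = sum(viewpoint)  # toy summary of the camera position/orientation
    return [f * (1.0 + v) for f in image_features]


def scene_representation(views: Sequence[Tuple[Sequence[float], Sequence[float]]]) -> Vector:
    """Aggregate any number of observed views by element-wise summation."""
    encoded = [encode_view(img, vp) for img, vp in views]
    return [sum(components) for components in zip(*encoded)]


view_a = ([1.0, 2.0], [0.0, 0.5])  # hypothetical (image features, viewpoint)
view_b = ([0.5, 0.0], [1.0, 0.0])
print(scene_representation([view_a]))          # [1.5, 3.0]
print(scene_representation([view_a, view_b]))  # [2.5, 3.0]
```

Summation is order-independent and keeps the representation the same size however many views are supplied, so more views add information without changing the rest of the model.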

They then asked whether they could take this same model and apply it to other tasks. With a 3D Tetris problem, it worked well. Again, the more images that were given, the lower the uncertainty. A further question is: is this useful? When applied to training a robot arm, they achieved better results than with direct training alone. The same network could also be used to predict motion over time when trained on snooker balls, implicitly learning the dynamics and physics of the world. This seemed to far outperform variational autoencoder techniques.

One of the things I love about the ReWork conferences is that they have very theory-based sessions and then throw in more applied AI talks. The second such talk of the day (after the FDL talk by James Parr) was Angel Serrano from Santander. He started off discussing different ways of organising teams within businesses and why they had gone for a central data science team (hub) with further data scientists (spokes) placed in specific teams, to get the best of both worlds: deep understanding of business functions and collaboration. He also noted that data management is a big issue for any company. A data lake created four years ago may not be fit for purpose now, in terms of usability by current AI technologies or support for GDPR, and it needs regular review and change where necessary. In Santander, the models are trained outside of the data lake for performance reasons, and this is a specific consideration.

Angel then went on to discuss different uses of AI within Santander, including some that are not obvious. Bank cards have a 6-year lifespan before they begin to degrade. If they have not been issued within 2 years of manufacture then they have to be destroyed. With over 200 types of card and minimum order quantities to manage, predicting stock requirements is an easy way for AI to add value within the bank without the regulatory overhead that modelling financial information carries.
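The card stock problem can be sketched as a small calculation. The 2-year issue window comes from the talk; everything else is a hypothetical simplification: a moving-average forecast standing in for whatever model Santander actually uses, constant demand, and first-in-first-out issuing so existing stock is used before a new batch. It shows why minimum orders plus an expiry window matter most for low-volume card types.

```python
import math

ISSUE_WINDOW_MONTHS = 24  # from the talk: unissued cards are destroyed 2 years after manufacture


def forecast_monthly_issues(history, window=3):
    """Hypothetical forecast: mean of the last `window` months of card issues."""
    recent = history[-window:]
    return sum(recent) / len(recent)


def plan_order(forecast, stock_on_hand, cover_months, min_order):
    """Return (order_quantity, expected_destroyed) for one card type.

    Assumes constant demand and first-in-first-out issuing, so existing
    stock is used before the new batch.
    """
    needed = max(0, math.ceil(forecast * cover_months) - stock_on_hand)
    if needed == 0:
        return 0, 0
    quantity = max(needed, min_order)  # the minimum order quantity applies
    issuable = math.floor(forecast * ISSUE_WINDOW_MONTHS)  # cards issuable before expiry
    destroyed = max(0, stock_on_hand + quantity - issuable)
    return quantity, destroyed


# Hypothetical low-volume card type: the minimum order forces waste.
print(plan_order(forecast_monthly_issues([90, 100, 110, 90]), 200, 6, 5000))  # (5000, 2800)
# Hypothetical high-volume card type: everything ordered can be issued in time.
print(plan_order(1000.0, 0, 6, 5000))  # (6000, 0)
```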

Lots of ideas and stimulating discussion in the breaks and many more great speakers to summarise.

Part 2 is available here.