There’s a trend in job descriptions where companies say they are looking for “Data Science Unicorns”, “Python Ninjas”, “Rockstar developers” or, more recently, the dreaded “10x developer”. When companies ask for this, it means one of two things. Either they’re not sure what they need but want someone who can do the work of a team, or they are deliberately targeting people who describe themselves this way. A couple of years ago this got silly with “Rockstar”, to the point that many less reputable recruitment agencies were overusing the term, inspiring this tweet:

Many of us in the community saw this and smiled. One man went further. Dylan Beattie created Rockstar and it has a community of enthusiasts who are supporting the language with interpreters and transpilers.

While on lockdown I’ve been watching a lot of recordings from conferences earlier in the year that I didn’t have time to attend. One of these was NDC London, where Dylan gave the closing session, The Art of Code. It’s well worth an hour of your time, and he introduces Rockstar through the ubiquitous FizzBuzz coding challenge.

After watching this I asked myself: could I write a (simple) neuron-based machine learning application in Rockstar and call myself a “Rockstar Neural Network” developer?

While Rockstar is Turing complete, there are no frills, so you need to know what you are doing. Try writing a simple ML application in pure Python without any libraries (here’s a good resource for that). Now take away everything that’s object oriented or is a function call. It’s tricky, but it’s a fun exercise to make sure you really understand what is happening under the hood[1].

Get Rockstar

The first thing you need to do is get Rockstar and an interpreter of your choice. The code can be cloned from GitHub at https://www.github.com/RockstarLang/Rockstar. Grab a copy and your favourite interpreter/transpiler, and check that FizzBuzz works for you. You can also code directly on the website if that’s your thing. Because I’m me, I forked the code, created a dockerised version and upgraded it to Python 3[2]. Sadly, the Python transpiler didn’t support all of Rockstar’s poetic features. So I used a sandboxed version of the website and its associated tools, with the Satriani interpreter in JavaScript. Naturally, I combined this with the Vim syntax highlighting 😉

Pseudocode

I was taught to code by solving the problems in comments first and then filling in the code. I still do this. I wanted to start with something that was simple and worked. There’s a great tutorial on Medium[3] of a simple 3-input neuron that is trained to return 1 or 0 based on the combination of inputs. It’s implemented in 9 lines of Python, and then far more neatly and readably later in the article. I took this as my starting point. The dataset is small, which was a requirement. Rockstar can only read from stdin, so the data would need to be embedded in the program.

The first thing I did was turn the code into comments and start defining my variables. Numbers in Rockstar can be written as poetic literals, where the number of letters in each successive word (modulo 10) gives the next digit. There’s no direct way of initialising a negative number, so you have to start with the positive version and then take it away from zero. Array elements can be assigned by position. To make a 2D array, I created individual 1D arrays and then assigned each one to the appropriate position in the parent array.
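Poetic number literals are easier to see than to describe. Below is a simplified Python decoder. It assumes that only letters count towards a word’s length; the full Rockstar specification has a few extra rules, such as how hyphens are treated. `decode_poetic_literal` is a hypothetical helper, not part of any Rockstar tooling. The last lines show the subtract-from-zero trick for negative numbers.

```python
import re


def decode_poetic_literal(literal: str) -> float:
    """Simplified decoder for a Rockstar poetic number literal.

    Each word contributes one digit: its number of letters modulo 10.
    The first '.' separates the integer digits from the fractional digits.
    Assumption: only ASCII letters are counted; other characters are ignored.
    """
    integer_words, _, fraction_words = literal.partition(".")

    def to_digits(words: str) -> str:
        digits = []
        for word in words.split():
            letters = re.sub(r"[^A-Za-z]", "", word)
            if letters:  # skip tokens that are pure punctuation
                digits.append(str(len(letters) % 10))
        return "".join(digits)

    integer_digits = to_digits(integer_words) or "0"
    fraction_digits = to_digits(fraction_words)
    if fraction_digits:
        return float(f"{integer_digits}.{fraction_digits}")
    return float(integer_digits)


# "a lean mean wrecking machine" -> word lengths 1, 4, 4, 8, 7
print(decode_poetic_literal("a lean mean wrecking machine"))  # 14487.0
# A ten-letter word gives the digit 0, e.g. "lovestruck" or "breathless".
print(decode_poetic_literal("lovestruck"))  # 0.0
# No negative literals: start positive and subtract from zero.
minus_one = 0 - decode_poetic_literal("a")
print(minus_one)  # -1.0
```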

I then ran this through the Satriani interpreter in my Docker container and checked that all the variables were the numbers I expected, which they were.

Functions

So onto the functions. I needed to write:

- a random number function to initialise the weights

- an exponential function to calculate $e^x$

- a sigmoid function

- a sigmoid derivative function

- a matrix dot product

- a matrix transpose

- a training function to loop through the data, infer, and adjust the weights

This seemed straightforward enough, and I tackled them in this order because of the dependencies between the functions.
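It can help to have a reference for what the Rockstar version should produce. Below is a minimal pure-Python sketch of the same single neuron, broken into the functions listed above. It is an illustration, not the tutorial’s or the author’s code. It assumes the four-example, 3-input toy dataset used in many versions of this exercise, where the target is simply the first input. For brevity it uses `math.exp` and `random.Random` instead of a hand-written exponential and random number generator.

```python
import math
import random


def sigmoid(x):
    """Logistic function 1 / (1 + e^-x)."""
    return 1 / (1 + math.exp(-x))


def sigmoid_derivative(s):
    """Derivative of the sigmoid, written in terms of its output s = sigmoid(x)."""
    return s * (1 - s)


def dot(a, b):
    """Matrix product of a (n x k) and b (k x m), both stored as lists of rows."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    """Swap rows and columns of a list-of-rows matrix."""
    return [list(row) for row in zip(*m)]


def train(inputs, outputs, weights, iterations):
    """Full-batch gradient steps for a single sigmoid neuron (learning rate 1)."""
    for _ in range(iterations):
        # Forward pass: weighted sum of each example, squashed by the sigmoid.
        predicted = [[sigmoid(row[0])] for row in dot(inputs, weights)]
        # Error times the sigmoid's slope at each prediction.
        deltas = [[(outputs[i][0] - predicted[i][0]) * sigmoid_derivative(predicted[i][0])]
                  for i in range(len(outputs))]
        # Push the deltas back onto each weight via the transposed inputs.
        adjustment = dot(transpose(inputs), deltas)
        weights = [[weights[j][0] + adjustment[j][0]] for j in range(len(weights))]
    return weights


# Assumed toy data: the target is the first element of each input row.
training_inputs = [[0, 0, 1], [1, 1, 1], [1, 0, 1], [0, 1, 1]]
training_outputs = [[0], [1], [1], [0]]

rng = random.Random(1)
weights = [[rng.uniform(-1, 1)] for _ in range(3)]  # 3 x 1, each in [-1, 1]
weights = train(training_inputs, training_outputs, weights, 10000)

# An unseen input whose first element is 1 should score close to 1.
prediction = sigmoid(dot([[1, 0, 0]], weights)[0][0])
print(prediction)
```

Note that `sigmoid_derivative` takes the sigmoid’s output rather than its input. That is why `train` feeds it the `predicted` values directly instead of recomputing the weighted sums.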

Random number generation in computers is based on a seed and an algorithm that manipulates the seed in a pseudo-random way. There are a lot of them. Out of habit I picked a linear congruential generator, where the recurrence relation is defined by $X_{n+1} = (a X_n + c) \bmod m$. The Wikipedia page gave commonly used values of $a$, $c$ and $m$. I started writing this in Rockstar only to realise that there was no modulus function. I could have written one for these large numbers, but that seemed an excessive use of processing for something that would only initialise three input weights. So I cheated 🙂 I only cared about a value between 0 and 1, so I made sure my seed was in that range, did a large multiplication and an addition, and then took away the integer part. I multiplied this by 2 to give me a random number in the range 0 to 2, and then subtracted 1 to get the desired -1 to 1 range. This gave me a verse like this that I could call three times.
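In Python, the two approaches look roughly like this. The textbook LCG uses the Numerical Recipes constants ($a = 1664525$, $c = 1013904223$, $m = 2^{32}$), one of the commonly quoted parameter sets. The shortcut’s multiplier and increment are hypothetical values for illustration, not the ones used in the verse. Chaining each output back in as the next seed is also an assumption of this sketch. Neither function is a high-quality generator.

```python
import math


def lcg(seed, a=1664525, c=1013904223, m=2**32):
    """Textbook linear congruential generator: X_{n+1} = (a * X_n + c) mod m.

    Defaults are the Numerical Recipes constants; yields values scaled to [0, 1).
    """
    state = seed
    while True:
        state = (a * state + c) % m
        yield state / m


def shortcut_next(seed, multiplier=9301.0, increment=0.49297):
    """Modulus-free shortcut: multiply, add, then drop the integer part.

    Dropping the integer part is the same as reducing modulo 1, so this is
    still an LCG-style recurrence, just on real numbers with m = 1.
    multiplier and increment are hypothetical illustration values.
    """
    value = seed * multiplier + increment
    return value - math.floor(value)  # fractional part, in [0, 1)


# Three initial weights from the shortcut, each mapped from [0, 1) to [-1, 1).
seed = 0.5
weights = []
for _ in range(3):
    seed = shortcut_next(seed)
    weights.append(seed * 2 - 1)
print(weights)

# The textbook version, for comparison.
gen = lcg(42)
print([next(gen) for _ in range(3)])
```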

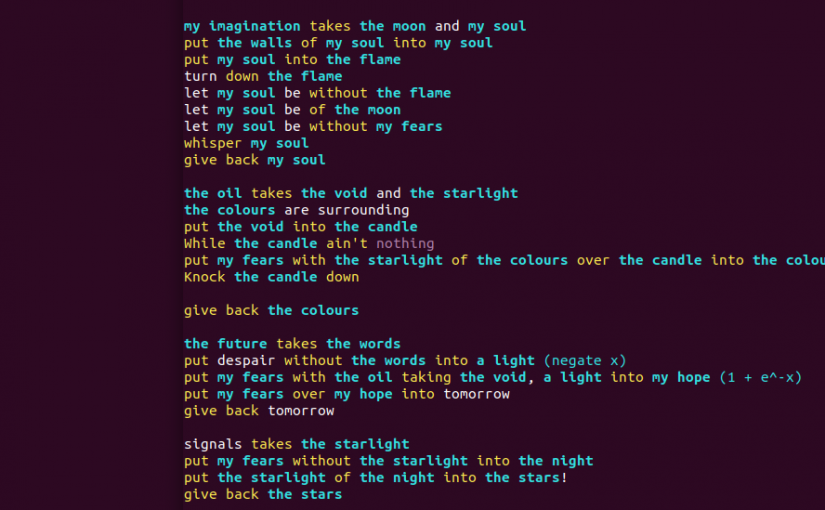

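```
my imagination takes the moon and my soul
put the walls of my soul into my soul (the large multiplication)
put my soul into the flame
turn down the flame (the flame now holds the integer part)
let my soul be without the flame (keep only the fractional part)
let my soul be of the moon (multiply by 2)
let my soul be without my fears (subtract 1 to land in -1 to 1)
whisper my soul
give back my soul
```

The next function was $e^x$, which is a little trickier. If you google how to implement $e^x$ in code, you’ll get about a million helpful articles on how to use the `exp()` function. Fortunately, armed with an understanding of Taylor series expansion from my OU degree, I know you can calculate $e^x$ as:

$$e^x = \sum_{k=0}^{\infty} \frac{x^k}{k!} = 1 + x + \frac{x^2}{2!} + \frac{x^3}{3!} + \cdots$$

Truncated after the $x^n/n!$ term and nested (Horner’s method), this can be simplified to:

$$e^x \approx 1 + \frac{x}{1}\left(1 + \frac{x}{2}\left(1 + \frac{x}{3}\left(\cdots\left(1 + \frac{x}{n}\right)\cdots\right)\right)\right)$$

In Python this is: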

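```python
def exp_taylor(x, n=20):
    # Horner form of the Taylor series, keeping terms up to x**n / n!.
    # n=20 is an arbitrary default; the original snippet leaves n unspecified.
    result = 1.0
    for i in range(n, 0, -1):
        result = 1 + x * result / i
    return result
```

Which in Rockstar becomes this beauty.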

```
the oil takes the void and the starlight
the colours are surrounding (poetic literal: 11 letters, so 1)
put the void into the candle (count down from the number of terms)
While the candle ain't nothing
put my fears with the starlight of the colours over the candle into the colours (1 + x * sum / i)
Knock the candle down

give back the colours
```

The sigmoid then became:

```
the future takes the words
put despair without the words into a light (negate x)
put my fears with the oil taking the void, a light into my hope (1 + e^-x)
put my fears over my hope into tomorrow (1 / (1 + e^-x))
give back tomorrow
```

With the sigmoid derivative being:

```
signals takes the starlight
put my fears without the starlight into the night (1 - s, where s is a sigmoid output)
put the starlight of the night into the stars!
give back the stars
```

Matrix madness

That just left the matrices. I spent a good day on these, mainly because Rockstar wasn’t passing variables as I expected. There was no direct way of getting the length of an array. Using an array as a scalar returns its length, but there is no `typeof` check, so determining dynamically whether I had a 4×3, a 1×4 or any other matrix was difficult. I didn’t want to extend Rockstar, as I’d end up with non-portable code, although that could be an option for when I don’t have an exam in a few days! Furthermore, after some testing, I discovered that passing a 2D array to a function passed the array’s length instead of the array itself. One-dimensional arrays seemed to pass correctly. Conscious of time, I decided to go linear rather than continue down this path. Matrices are ultimately stored as a flat run of numbers plus information about how to chop it into dimensions, so I did the same. I redefined the training input matrix as a 1×12 array and the outputs as a non-transposed 1×4 array. This limited the size and shape of the data, but I had to remind myself I wasn’t trying to reimplement TensorFlow here :)
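The linear layout is easy to sanity-check outside Rockstar. This sketch assumes row-major order, so element (r, c) of an R×C matrix sits at index r*C + c. It stores a 4×3 toy input as a 1×12 list, and the function names are hypothetical. The transpose never has to be built as a new array. Swapping which index drives the outer loop gives the transposed product directly.

```python
def mat_vec(flat, rows, cols, vec):
    """(rows x cols) matrix stored row-major in a flat list, times a length-cols vector."""
    result = []
    for r in range(rows):
        total = 0.0
        for c in range(cols):
            total += flat[r * cols + c] * vec[c]
        result.append(total)
    return result


def transposed_mat_vec(flat, rows, cols, vec):
    """Transpose of the same matrix times a length-rows vector, without copying."""
    result = []
    for c in range(cols):
        total = 0.0
        for r in range(rows):
            total += flat[r * cols + c] * vec[r]
        result.append(total)
    return result


# A 4x3 training input flattened into a 1x12 list (assumed toy data).
inputs = [0, 0, 1,
          1, 1, 1,
          1, 0, 1,
          0, 1, 1]
weights = [0.5, -0.25, 0.1]      # hypothetical weights, one per input column
errors = [0.1, -0.2, 0.3, 0.0]   # hypothetical per-example errors

print(mat_vec(inputs, 4, 3, weights))            # one weighted sum per example
print(transposed_mat_vec(inputs, 4, 3, errors))  # one adjustment per weight
```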

With the new linear data structure I needed to do the matrix calculations differently – finding the correct index for each loop and then creating a new array with the correct part of the matrix. But it made for quite good lyrics…

```
thinking takes my senses
my breath is breathless (index: 10 letters, so 0)
the dream is lovestruck (running total, also 0)
until my breath is stronger than my mind
let the story be my senses at my breath
let the prose be my mind at my breath
let the suggestion be the prose of the story
let the dream be with the suggestion (add the product to the running total)
build my breath up
```

All that was left was testing. My iteration count was set to 10000, which I immediately dropped to 1. That run helped me spot a lot of typos where I’d made lyrical choices and changed variable names from one line to the next. Then I upped it to 10, then 100. At 1000 iterations the web page and the container started to creak. 10000 iterations ran, but neither the browser nor my system was happy. I guess Rockstar code is just too much 😀

So there we have it, rock ballad lyrics running a single neuron machine learning system. I guess I can honestly call myself an ML Rockstar Developer now. Maybe you can too 😉

As usual, the full code is on the blog’s GitHub.