The Science and Technology Select Committee here in the UK have launched an inquiry into the use of algorithms in public and business decision making and are asking for written evidence on a number of topics. One of these topics is best practice in algorithmic decision making, and one of the specific points they highlight is whether this can be done in a ‘transparent’ or ‘accountable’ way[1]. If there were such transparency, the decisions made could be understood and challenged.

It’s an interesting idea. On the surface, it seems reasonable that we should understand the decisions to verify and trust the algorithms, but the practicality of this is where the problem lies.

An algorithm is just a series of instructions to be followed, although Parliament are using it in the very broad sense of any autonomous decision making. An “If-This-Then-That” algorithmic control, even a very complex one, can be put in a form that a non-technical individual can understand, allowing transparency. This allows companies to respond to information requests about decisions made[2]. While there is merit in making this completely transparent in the public sector, I worry that any transparency legislation in the business sector will cause companies to lose competitive advantage. This is potentially just a wording issue, but it needs to be considered.
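As a minimal sketch of why rule-based decisions are easy to explain, here is a toy “If-This-Then-That” check that returns its reasons alongside its decision. The loan scenario, the function name `decide_loan` and the thresholds are all invented for illustration; they are not taken from any real system.

```python
def decide_loan(income, existing_debt, missed_payments):
    """Return (decision, reasons) for a hypothetical loan application."""
    reasons = []
    # Each rule is a plain condition, so each refusal has a plain-English reason.
    if missed_payments > 2:
        reasons.append(f"missed payments ({missed_payments}) exceed the limit of 2")
    if existing_debt > 0.5 * income:
        reasons.append("existing debt is more than half of annual income")
    if reasons:
        return "declined", reasons
    return "approved", ["all rules passed"]


decision, reasons = decide_loan(income=30000, existing_debt=20000, missed_payments=1)
print(decision, reasons)
```

Because every branch maps to a sentence a person can read, answering an information request is just a matter of returning the reasons that fired.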

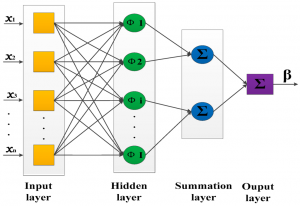

What interests me more is abstracted algorithmic decisions. I create deep learning systems. I don’t tell the code how to make decisions; I let the system figure it out for itself based on the data I give it. I could show my network architecture, and even the weights on each node, but would this really make the algorithmic decision making transparent?
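To make that question concrete, here is a toy network small enough to publish in full. The weights are hand-picked stand-ins for what a training run might produce (an assumption for illustration, not output from any real model), and the names `predict` and `decisions` are my own.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Hand-picked values standing in for learned weights (illustrative only).
HIDDEN_WEIGHTS = [[20.0, 20.0], [-20.0, -20.0]]  # one row per hidden node
HIDDEN_BIASES = [-10.0, 30.0]
OUTPUT_WEIGHTS = [20.0, 20.0]
OUTPUT_BIAS = -30.0


def predict(x1, x2):
    """Forward pass through a 2-input, 2-hidden-node, 1-output network."""
    hidden = [
        sigmoid(w[0] * x1 + w[1] * x2 + b)
        for w, b in zip(HIDDEN_WEIGHTS, HIDDEN_BIASES)
    ]
    score = sum(w * h for w, h in zip(OUTPUT_WEIGHTS, hidden)) + OUTPUT_BIAS
    return sigmoid(score)


# Full disclosure: every parameter and every decision the network can make.
decisions = {(a, b): round(predict(a, b)) for a in (0, 1) for b in (0, 1)}
print(HIDDEN_WEIGHTS, HIDDEN_BIASES, OUTPUT_WEIGHTS, OUTPUT_BIAS)
print(decisions)
```

Everything about this network is on the page, yet the nine numbers do not read as a reason for any individual decision. A production deep learning model has millions of them.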

One of the reasons that Parliament are looking into this is the EU General Data Protection Regulation 2016, which applies from May 2018. Brexit doesn’t affect this: we will still be full members of the EU at that point, we agreed to it before the referendum, and all EU regulations will remain UK regulations after we leave unless the UK government repeals them. Also, if you hold data on any EU citizen, this regulation will still affect your company even in the unlikely event that the UK Government overturns it[3]. In summary, you have the right to know where your data is being used, you have to give consent and you can withdraw that consent at any time[4]. If your data was used to tune or train an algorithm and you withdraw your consent, what does that mean for the company involved?

While it will be necessary to gain explicit consent to allow your data (images, videos, text, internet behaviour etc.) to be used for algorithmic purposes, it is almost certain that this will be included in terms and conditions for services[5], as it is easy for companies to argue that they cannot provide those services without the ability to improve them. We will be giving this permission, perhaps without really realising. So when it comes to withdrawing this permission, what will happen?

In all the models that I have worked on, once tuned or trained, there is no link back to the individual: the data has been abstracted into numeric weights somewhere in the system. I can see only two options when permission to use the data is withdrawn:

- Nothing happens: the algorithm continues with the abstracted information, but the raw data will no longer be available for any future retraining.

- The algorithm has to be retrained and redeployed on the data set excluding the raw data that is no longer permitted.

I’d like to think that as a society we will go with the first option, and I hope that the EU can clarify this before someone becomes the subject of a legal test case. The data is so abstracted that it is no longer identifiable. Can you really see companies actually doing the second option? How could it be proved that specific data wasn’t in a model? With the correct amount and type of data, removing a small amount should not affect the algorithm to any noticeable extent.
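The second option, and the claim that it should barely change anything, can be sketched on a deliberately simple model. This is a toy illustration under stated assumptions: synthetic data, 200 hypothetical users with five records each, and a straight-line fit standing in for a real trained system. The names `fit_line`, `records` and `withdrawn_user` are mine.

```python
import random


def fit_line(points):
    """Ordinary least squares fit of y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


rng = random.Random(0)  # fixed seed so the run is repeatable
records = []
for i in range(1000):
    user_id = i % 200  # 200 hypothetical users, 5 records each
    x = rng.uniform(0, 10)
    y = 2 * x + 1 + rng.gauss(0, 1)  # synthetic relationship plus noise
    records.append((user_id, x, y))

withdrawn_user = 42  # this user withdraws consent

full_model = fit_line([(x, y) for _, x, y in records])
remaining = [(x, y) for uid, x, y in records if uid != withdrawn_user]
retrained_model = fit_line(remaining)

slope_shift = abs(full_model[0] - retrained_model[0])
intercept_shift = abs(full_model[1] - retrained_model[1])
print(full_model, retrained_model, slope_shift, intercept_shift)
```

In this sketch the retrained parameters differ from the originals only slightly, and nothing in either pair of numbers reveals whether user 42 was ever in the training set. Whether the same holds for a given production model depends on the amount and type of data behind it.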

This leads me back to the algorithmic transparency question. I just don’t see a practical way that any machine-learned intelligence could be made transparent to the extent that is being suggested. In my view, we need greater understanding of technology in society as a whole so that we can reach sound conclusions ourselves as to whether to hand decisions over to algorithms.

1. The government briefing included the quotes.

2. I’m using decision in its broadest sense here, as in “any output by a machine in response to specific data inputs”.

3. Data is global: if you deal in it, you need to be aware of the worldwide regulations, not just those of your own country.

4. There is a lot more to it than that, but those are the relevant points for this discussion.

5. Always read T&Cs and EULAs.