This is a summary of day 2 of ReWork Deep Learning summit 2016 that took place in Boston, May 12-13th. If you want to read the summary of day 1 then you can read my notes here.

After an intro by Alejandro Jaimes (who was speaking later in the morning), Nashlie Sephus started the day with a discussion of Partpic – a company using deep learning to remove some of the guesswork from finding replacement parts (billed as “Shazam” for parts). Most spare parts are listed in very large manuals making it very difficult for end users to identify what they need easily, or even for suppliers to ensure the correct parts are being supplied. Partpic has a visual inventory of parts, allowing the user to take a picture of the part1 and their system will identify all the details. Even relatively simple parts the variety is considerable (e.g. bolts2) and so good training data is difficult to get hold of. Also, in any solution such as this, usability is key so the pictures need to be good enough to match. The partpic system checks focus, angles, light levels etc to ensure that the incoming data can be matched accurately against the database. They have developed kiosks for users, where the focal distances and light levels can be controlled and have also built their own imaging devices where they can get 360 degree visibility, and can control all aspects of the image to create data for matching. One of the challenges is that there are a lot of similar looking parts so they use semi-supervised segmentation. They also have to handle where the part is damaged, dirty or rusty as parts to be replaced are often in poor condition.

Second up was Cambran Carter from GumGum who provide image advertising and social listening. Ads are context based and augment the images on which they are overlaid. Using social listening they look for logos and faces and package this back with a combination of image understanding and NLP. Where they can, predictions re added about the sorts of images that may become popular in the near future. One of their clients specifically asked for recognition of images of “bold lips”, and Cambran gave a nice demo of some of the problems they encountered solving this problem. He also gave a really nice example of why academic accuracy and precision doesn’t work well in the real world. With 60% recall and 98% precision, most academics would be fairly happy. However, you pass a million images through the same system, assuming 1% contain what you’re looking for and you only find 60% of those, you’ll get about 6000 true positives. But, the false positives are across the whole space, so 2% of 1million is 20,000, which far outweighs the true recall rate. This is one of the trickier problems to solve with wild data. Cambran also touched on the image safety problem and how filtering out unsafe images can be very difficult as innocent images can often be filtered while very suggestive/unsafe images could get through as they do not match the criteria – this requires further work by the industry.

The third talk was by Mark Hammand from Bonsai3. By focussing on low level data, we are losing the forest for the trees. Databases helped manage data better than having to code solutions for every single problem – this wasn’t as good as hand-tuned, but development was far faster. Bonsai are hoping to do the same thing for deep learning – abstract the problem layer to allow faster and better systems4. What is the equivalent of an entity relation for Deep Learning? Bonsai have split problems into three aspects: Concepts (what are we trying to learn), Curriculum (how do we teach the system and quantify this) and Materials (what data do we use and where does it come from?). This concept is pedagogical programming, staying at the highest level of abstraction to teach systems. They have a new programming language, inkling, which is a cross between Python and SQL. They had a neat demo of a number recognition system being trained through their curriculum process and can replicate the breakout game play demo that Google’s DeepMind had achieved 5. This pedagogical approach can be 3x faster than traditional and also solve problems that other systems could not (e.g. the Bonsai team managed to create a system to play PacMan, which the Deep Mind team could not. A further benefit of this approach is that it doesn’t need structured data, although this does help with early stages.

Adham Ghizali from Imagry was the next talk, with large scale visual understanding for Enterprise. This was a fascinating talk for me as Adham focussed on inspiration from biology, which is where my own thesis was based6. Imagry is putting together a low footprint, low power recognition that will work in real time on a mobile device (rather than relying on expensive round trips to a cloud server). Compressing data 200 times dramatically reduces accuracy, so they took a step back to look at schema definitions – what is the minimum needed to describe the object to be recognised and then augmenting this schema to add new items for recognition. New categories can be added with schemas, so there is no need to retrain the end system. Parallel GPUs were used for training, but the actual system was run on high end mobiles on CPUs. Again, data was an issue, with 5% of the labelling done by mechanical turk, but the remaining data created using an unsupervised labelling algorithm.

Dublin-based start up Aylien was next with Parsa Ghaffri. Language and vision is a hard problem – there are so many aspects to identify. Add to this the scale of content uploaded is more than anyone could consume in their lifetime, we have to make intelligent choices about what is important. Aylien have developed APIs to simplify this as a plug in for other companies. Specifically their focus is on the news and media markets with APIs for news insights and predictions as well as text analysis. This analysis can be at document, aspect or entity level. Their APIs are language agnostic with multichannel word and character input and are backed by Tensorflow. Word embedding lead to dense vectors and in the real world the corpus is uncategorised – Wikipedia is a great example of emergent properties. Word2Vec can’t handle unknown words and it is not always true that the vectors are independent, it also doesn’t scale to new languages. Aylien started creating byte2vec – with less supervision this was an effective supplement to word2vec and gave language independence. As of the summit, Aylien are hoping to open source byte2vec. Currently they only support 6 languages and are hoping to expand this to more complex languages later in the year. Interestingly they started using Theano and then changed to Tensorflow so they could get the full benefit of Google Cloud.

As you can probably imagine, Facebook use deep learning to solve a range of problems. Andrew Tulloch went into detail on some of these features. Facebook’s goal is to make the world more connected – with 414M translations every day, automatically seeing posts in your own language is a great benefit. There are over 1 billion people using facebook each day with an average of 1500 stories per day for each person’s feed. Ranking and search is key to delivering the most relevant content7, including the most relevant advertisements. This needs to be very fast and uses small, efficient deep learning models. Vision is another big area for them – understanding the type, location, activity, people in the image etc. 400M images are loaded to facebook every day, some have tags but this isn’t common on facebook yet. Instagram provides 60M images, but these are mostly well tagged. The imageNet data set consists of clean images, even if they are only part images. In contrast, facebook images are usually very cluttered and active (and not always in focus 😉 ). One of the things that Facebook is looking to do is define interests based on the images you post, e.g. guitars, or enable a photo search. They’ve looked into creating faster convolutional algorithms. NNPack is a cuDNN interface (available on GitHub) that gives a 2x-6x improvement on baseline CPU. The bulk of memory usage is in activations – by running this in a register 50-90% savings can be made. It didn’t pass us by that Andrew was talking about CPUs and not GPUs and when asked about this he noted that a lot of the existing hardware facebook has is CPU optimised8, although training the models is done on GPUs and these are being added into production over time. Facebook was built on open source software so their ethos is to release as much as possible and give back to the community. Their GitHub page is a great place to start.

The next talk showed a more practical application of deep learning rather than just dealing with data presented back on a computer. Joseph Durham from Amazon Robotics (which is the largest non-military robotic fleet in the world with 30000 robots in the field) gave us some history and examples of their system. Originally Kiva systems, they were acquired by Amazon and put to use in the warehouse. The fleet runs from the AWS cloud and consists of the robots (drive units) along with chargers, shelf pods and the whole wireless network. In the warehouse, efficiency is maximised by getting the products to the pickers as quickly as possible. Products are stored on tall shelves that can be transported to the pickers and returned to location so the pickers just stay in one place and the products come to them for packing. The warehouse has QR codes on the floor to help orientate the robots9. When given an instruction to get a specific pod, then can slide under other shelves until they find the right one, pick it up and then navigate through the aisles (street driving) to the human operator, queue until the item has been picked and then return to drop the shelf in a spare place. While this may appear simple there are several interesting problems. Congestion can occur in the streets and highways if the transport is not orchestrated cleanly – just direct routes without wait can cause jams. Parking the pods at the correct distance for efficiency is also an issue – not all products have the same popularity and the pods are sorted as items are picked so that popular items filter to the front and less requested items move closer to the back. Since the popularity of an item changes over time this is not something that can be pre-programmed. Simpler items (robot health, product velocity etc) are easily achievable what is harder is the recognition of objects and packing these into bins (currently done by humans!). Amazon hold a picking challenge which attracts a wide variety of entries from garage robots to very engineered university solutions. Well worth entering if you like combining learning and robotics, although you’ll need to wait until they announce the 2017 challenge. Amazon are also looking to apply this routing solution to drone delivery – 3D is significantly harder!

Jianxing Xiao continued the robot theme. He started with a summary of what $100M humanoid robots can actually do and this is far from the science fiction expectation. Behind the scenes the robots are generally not 100% autonomous and with human mistakes, the robot fails. Therefore, we need to remove the human (and their mistakes) from the equation, including the human designed algorithms that simply do not work in real solutions. For 3D object recognition, we expect a category and a bounding box around the identified object. This generally fails where the object is in an unknown configuration and is particularly relevant for the Amazon picking challenge where objects could be moved as part of the picking process. To get around this, they created multiple images from different viewpoints and combined them with point ot point correspondence – the only real problem here was identification that points were the same without hand labelling. They used deep learning to match geometric key points and used SLAM to generate an “imagined” 3D model that could be compared.

AiCure and Alejandro Jaimes were next. For clinical trials, adherence to the protocol is critical – some people forget, or side effects make them stop taking the medication without contacting the trial. This could result in trials failing and drugs not being approved, or delays in drugs getting approval, or even drugs getting approval that are unsafe . Following protocol is also important for treatment with approved medications – the treatment may not be working due to the patient not taking the medication correctly rather than the drug being ineffective. Non adherence to trials costs in the range of $100-$300 billion per year with a range of 43%-78% adherence in trials. AiCure are looking to derisk clinical trials by identifying that the medication is in the mouth and that subsequently the mouth is empty. With the data going straight to the platfor4m, patients or trialists can be contacted if doses are missed, to allow better service. This can be achieved without any changes to the manufacturing process. The solution works with smartphones and is scalable and very simple, working even without signal, transmitting the data when a connection is available. Machine Learning is used for the face recognition, identification of the medication, confirming ingestion and anti-fraud detection. This prevents the wrong people from taking the medication, the wrong pills being ingested (e.g. aspirin rather than the trial medication) and also catches off screen spitting. Traditional protocol adherence was done through counting the blister packs and blood draws, giving discrete samples with time consuming tests. The AiCure solution gives continuous data allowing those who are not adhering in trials to be replaced quickly and individuals in treatment to get the extra support they need without waiting for a regular doctor consultation. When the solution was itself trailed, the perception of patients was generally positive and, without understanding the technology they found it very easy to use. The solution could also catch dual enrolment in trials that they may not have disclosed. The deep learning is at the intersection of the data and human aspects and the user experience to create an intelligent medical assistant.

David Klein of Conservation Metrics gave a great talk on some of the applications of deep learning to conservation problems. There are millions of species on the plnet and lots of ecosystems are impacted by the things humans do. Current extinctions are at 1000 times the natural rate. Monitoring and data is available from multiple sources and the company is trying to remove the complexity and cost from environmental research by leveraging deep learning to analyse biodiversity data. Deep learning is a great fit for these problems which can be pattern recognition, vision, sound or multiple tasks. David gave some great examples of filtering out sounds to listen for specific birds, localise species in infrared images and behavioural analysis. Because there were so many great examples of conservation, he didn’t go into detail on any of the deep learning side, but their projects are truly interesting and I would suggest having a good look at their website.

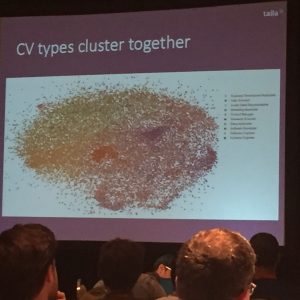

One of my continuing annoyances when recruiting is that agencies often use naïve keyword searches to find candidates, rather than a true understanding of experience or fit. Byran Galbraith from Talla presented a new way of identifying candidates with CV2Vec. They had a corpus of 30000 CVS and were interested in 10 job titles. They extracted the information and tagged it and applied doc2vec. This didn’t give any objective results and with the noisy data it was hard to tune hyperparameters. They wanted to find a candidate that “looked like” a second candidate. With their new model, they found some interesting connections. Boeing engineers often went on to be aerospace engineers as a trivial example. After using their model, they projected the CVs onto a 2D map: researchers were very clustered, general engineers were less clustered. They also tried to predict the candidates next job based on previous job titles. They used RNN with LSTM and this seemed to work. There were a lot of future steps the company has not started on yet: fraud detection, candidate ranking, correlation with GitHub etc. In assume there were so many questions as most people in the room were recruiting and struggling to find good people!

To wrap up the second day there was a short panel. First up was the discussion of deep learning in healthcare. In the US, the third largest cause of death is “accidents” with 210k – 400k people a year. Sadly, because of how the US healthcare system works, data is captured for legal protection and financial reimbursement over continuity of healthcare. The data is not quantifiable or reliable and is generally unstructured text. There are fundamental problems in these notes e.g. the illness can be 80% incorrect – to get reimbursement for a test procedure, generally you must get diagnosed with the condition the test was for10. It seems there is a long way to go before there will be the open honest sharing of accurate data that the field needs to truly advance. The panel’s assessment was that there would never be an AI doctor11 but that the AI could give a probabilistic assessment based on the data presented. There is a reticence of technology within medicine at present (maybe this is just the USA?). The panel then moved on to manufacturing and robots. The technical cost of innovation on the factory floor is fairly steep so there needs to be a very high return in order for the investment to be made. AI needs to be simple to use and fast. There is no appetite for training once deployed and focus needs to be on the problems and how to solve them rather than skeuomorphic design. A person should be able to show a robot what to do and it should learns with no complex programming, e.g. demonstrating picking up an object. At least, that’s the dream.

The conference closed out with a really nice appreciation to all of us working in this field – the advances are huge year on year.

“Great job everyone!”

- with a penny for scale ↩

- consider head diameter and thickness, length and diameter of smooth part, length and diameter of threaded part, pitch and angle of thread, material and finish ↩

- who I spoke to in one of the networking sessions – very interesting concept ↩

- It is possible that this has already been done with the frameworks that are freely available and do we need a further level of abstraction? ↩

- I’ll add the reference here once I’ve found it ↩

- My doctorate was on computation models of invertebrate neurons exhibiting cyclic patterns and reinforcement learning. I think there are still a lot of biological approaches that we could apply to deep learning. ↩

- I hate this “top stories” feature personally and have seen this creep into twitter too I wish Facebook would remember my preference for most recent… ↩

- nice to know that even facebook has operational issues that prevent them from using the best solutions! ↩

- which look like heavy duty Roombas ↩

- This was a serious WTF moment for me. I love the fact the UK has the NHS – it’s not perfect but you don’t have to get your doctor to fraudulently diagnose a condition to avoid you going bankrupt…. ↩

- Who would they sue? the company, the programmer??? ↩