If you’ve been following this blog you’ll know that there have been great advances in artificial image generation over the past few years, to the point where having a picture of something no longer necessarily means that it is real. Image advances are easy to talk about, as there’s something tangible to show, but there have been similarly large leaps forward in other areas, particularly voice synthesis and handwriting generation.

Voice simulation has been around for a while. In the *How to make a Human* documentary it was used to create an artificial Gemma Chan with the aim of fooling journalists. It sounded fairly authentic, although to be safe it was played over a Skype call so that any aberrations could be blamed on the connection¹. Now, however, the Canadian start-up Lyrebird claims to be able to reproduce anyone’s voice from just a minute of sample recordings. Not only can the words be changed, but so can the emotion with which they are spoken. Their results are impressive, although they still sound a little artificial. It won’t be long before those imperfections are removed (or at least become optional).

This is the stuff of science fiction. Imagine things you have never said being played in court in your own voice, alongside artificially created images showing you doing something you never did. This technology could be used to give a natural voice back to throat cancer sufferers, or to let your emails be read out to your child in your voice while you’re away, but I can think of far more harmful uses for it in the near future than beneficial ones².

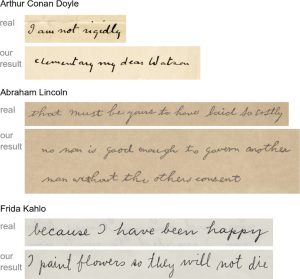

A similar step forward came last year with “My Text in Your Handwriting” from a team at UCL. We may all type and text now, but our signatures still carry weight, and handwritten letters, notes and essays are still treated as proof that a specific individual wrote them. Forging handwriting well enough to avoid detection has always taken a lot of skill. Not any more…

So we can’t trust images, audio or even scans of handwritten documents, as all of these are becoming too easy to fake. There’s a legal issue here that needs resolving quickly, and our legal systems³ evolve slowly. Evidence is checked for signs of tampering, but those checks are not designed for material that has been generated entirely artificially. This is something that should be considered, debated and tested within the legal community.

We are all going to have to readjust how we treat the evidence around us in the very near future. From a teacher assessing homework, to a journalist deciding whether the photos they are about to print are genuine, to a judge reaching a verdict, we’re going to need a healthy scepticism to tell the genuine from the artificial. AI is giving us some amazing tools to make our lives easier, but we need to adapt as they become part of our everyday experience.