So, day one of the ReWork Deep Learning Summit Boston 2015 is over. A lot of interesting talks and demonstrations all round. All talks were recorded so I will update this post as they become available with the links to wherever the recordings are posted – I know I’ll be rewatching them.

Following a brief introduction the day kicked off with a presentation from Christian Szegedy of Google looking at the deep learning they had set up to analyse YouTube videos. They’d taken the traditional networks used in Google and made them smaller, discovering that an architecture with several layers of small networks was more computationally efficient that larger ones, with a 5 level (inception-5) most efficient. Several papers were referenced, which I’ll need to look up later, but the results looked interesting.

The second talk was from Alejandro Jaimes at Yahoo pondering whether creativity could be learned. When a user searches for text, they find the link they’re interested in and click on it. But with images searches, users tend to browse lots of images looking for the right picture, which is a different interaction pattern for products. Yahoo were particularly looking at what makes a good portrait picture for celebrities so that these could be given priority. While there are some generalities for this (lighting, direction of face etc) there are also some specifics (the Queen usually wears a hat, racing drivers wear caps) and also a time component (Katy Perry should show her latest hair colour, sports players should be in their latest strips to avoid being discarded) which makes the problem more complex.

Yahoo were also looking at videos and whether it was possible to define creativity in videos. They used a human judged video set for training looking at audio, visual, features, scene content etc and used DL to build reasoning engines to predict how creative the videos were. Using this analysis they were able to create summaries of the most interesting parts of long videos and also animated gifs. This left the entire room with Taylor Swift’s Bad Blood, used as the example video for the demonstration, as an earworm!

Next up was Kevin Murphy (yes that Kevin Murphy of Machine Learning although I didn’t put 2 and 2 together at the time!), another Google employee looking at semantic image segmentation. Current techniques make tagging an image content easy but at the expense of further information – especially when objects are close together – the image may show multiple chairs but be tagged “chair”. This is down to mapping the image as a probability density of part of the image being the object in question, blurring the edges. By adding in edge detection, the probability can be bounded within the confines of the object shape e.g. we don’t average over sky and plane pixels. They also added a rule that a proportion of the pixels must be the object in question, not just one, which is a usual minimum. The group hadn’t looked into sub parts of bounding boxes e.g. to identify arms rather than just a body, but this is a natural extension of the technology.

Using commonly labelled images, this gave some interesting result, particularly of a rider on a horse in water, where some of the edges would be difficult to detect. There were challenges, particularly if objects in the image were unlabelled (e.g. a tree in the background). With this technique they were building a food app to identify components of a meal to determine calories. It didn’t matter if the result wasn’t correct as users of this sort of app are good at adding and correcting – for these sort of apps they are self motivated to provide the corrective feedback. Knowing what objects are commonly paired, even if the app didn’t see an object (e.g. cheese on a burger) it could prompt for these items. I can see this being taken up by a lot of people – anything that takes the effort out of tracking calories accurately will do well.

Andrew Ng from Baidu was next up. I’d been looking forward to hearing one of the main people in DL speak, although I was a little disappointed. There was no real content to the talk, given in a chat format rather than a presentation. He did allude to some interesting work they were doing where they were training to get an answer to a question when presented with a picture: on showing a picture of a bus and the question “what colour is the bus?” being able to answer “red”. There was no concept yet of a “killer app” and wasn’t sure if we were ready to define this yet. He then went on to talk about speech recognition and the difficulties with speech recognition cross language. While this was a great statement of the problem, there was a lack of ideas presented, possibly due to embargoes by Baidu (?). What was particularly interesting was that Andrew Ng was clear that he hadn’t anticipated the progress in image and speech recognition in the past 2 years, but that we shouldn’t be worrying about where AI would take us in the future. There was a question asking why, if he couldn’t predict 2 years into the future, how could he make statements further ahead that other things would or would not happen in the near future? This wasn’t answered satisfactorily for me.

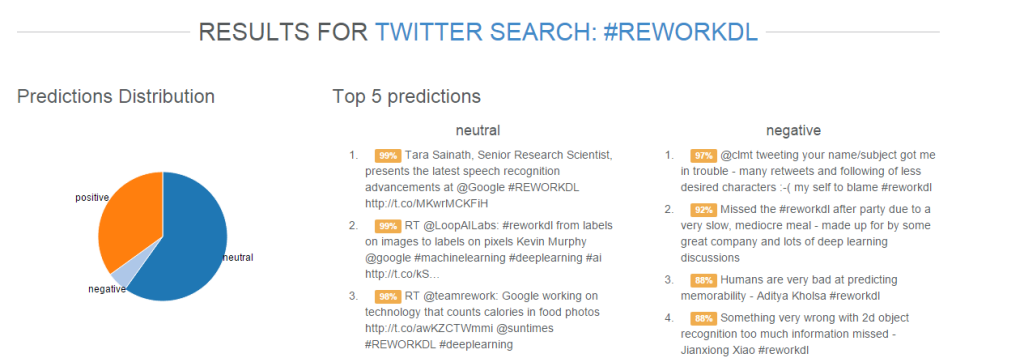

After a much needed caffeine break we were back in to a talk by Richard Socher, co-founder of MetaMind – who were also exhibiting. Richard noted that while we communicate in natural language, we experience the world visually and combining the two is important to gain understanding. This is not a simple problem. The order of words is important for context, especially with single (or double) negatives e.g. “not a good movie” or “the first 3 minutes were boring but by the end I enjoyed the talk” – this is more complicated than simply a bag-of-words analysis and he demonstrated one of the demos available on their site: the twitter sentiment analyser.

He also talked about how this type of text analysis could help with solving problems like Jeopardy and how they’d trained the network using facts, sorted into 100 dimensional entity vectors. It was interesting that similar facts grouped together in the vector space (e.g. presidents). While not perfect, the system could beat college students. While IBM’s Watson took years with 100s of people, the Metamind demo was done by a single student in one year. While impressive, this does underlie the immense steps forward that the Deep Learning community are taking. He ended with a demo of image engagement heat maps and determining whether an image was engaging or not (along with the tweet text) so this could be checked before posting, and a teaser that more things were coming in the next few weeks. I’ll be keeping an eye out for this.

Data is always an issue for deep learning and companies such as eBay have a lot of data. Marc Delingat and Hassan Sawaf told us that they were looking at vast improvements to user experience by allowing local language searching and displaying results translated on the fly. As anyone who has ever used eBay knows, listings have their own peculiar language and odd words. They have applied deep learning to the translation problem and also auto tagging images to allow listings to appear even if the searched term is not explicitly in the listing title. The categories that an item is posted in can help shortcut some of the process although some niche items are very hard to classify (rare Magic the Gathering cards for example – where usually just the card name is posted and the image is a close up of the card, which doesn’t classify easily).

After lunch, Daniel McDuff from Affectiva showed us what can be done monitoring people’s reactions while viewing content – either as part of marketing research or as an opt in monitor. It occurred to me that I have done some market research where I allowed my webcam to watch my face and I spent the rest of the talk wondering if I was in the database and if so, hoping fervently I wouldn’t see my face appear on a slide 😉 (spoiler – it didn’t). There is a difference between response data that has come from a survey (and been through a cognitive filter) and what our faces betray unconsciously, so much more honest result can be obtained by facial recognition. They found that amount of expression varies with age, gender and culture with women being on average 29% more expressive than men, although the difference was the least in the UK (are us Brits more comfortable expressing ourselves than other countries?). Once trained, they could look at the results for adverts, TV shows and political content (the latter being very good for promoting negative emotions). While this was going on, I had a side conversation on Twitter about applying this technology in the gaming industry – having the game adjust to your engagement and the in game AI characters responding to how you look (add in an occulous for immersion and we’re not far off from total immersion video games 🙂 ). I digress.

Daniel was keen to state that they were not currently looking at deceptive emotion (fake smiles etc) – the company was about making experiences better based on how we respond and we should have the right to hide how we’re feeling from AI around us. You may want to have a think about you feel about that for later on in this post. He accepted that there was a bias in the data set of people who opted in to monitoring as they would be a certain sort of person, although with the market research they can define the segmentation. It may also be important to monitor in more natural environments, rather than just in front of a machine, but this is a separate problem.

Charlie Tang from University of Toronto split the two emotion talks for a look at some of the amazing visual recognition techniques he’s been working on. Without getting too far into the detail, he had 3 different methods:

- Robust Boltzman machine: this took unlabelled data for face recognition. As you add occlusions, the performance degrades. One solution is to “clamp” the clean area of the image and use that for matching. The system had no knowledge of the occluded pixels, yet was able to identify the clean area for matching. This also worked when the face was occluded with noise rather than a solid block. This has great applications for security ID where someone may have sunglasses, a hat, or be deliberately obscuring part of their face.

- Deep Lambertian: Matching can become difficult if the light levels between the source image and the test image are different. The tone of a pixel in an image will depend on the light levels, angle of reflection and the reflectiveness of the surface. From a single image, this technique allowed Tang to determine how the image would look under multiple different lighting conditions so that the source image can be modified to match the current test conditions before attempting a match.

- Generative model: Finding the area of interest in an image is difficult, whether this is a face or anything else. While a standard Gaussian may find this, it can also get stuck at local optimums, even if this is not right. Using a convolutional neural network, the area can shift to a new location and then reoptimise until the correct location was found.

The three techniques together could give huge accuracy improvements for companies working in face recognition, although similar methods could be applied to any deep learning project to remove noise, resolve attenuation and pinpoint key points of interest.

We then moved onto the second emotion recognition talk from Modar Alaoui at Eyeris. For their training, they’d segmented the data into 5 ethnic groups, 4 age groups, 2 genders, 4 lighting conditions and 4 head poses, providing a lot of different combinations. Their software is trained to read microexpressions and Modar was very clear that this was the direction he felt the technology should be going – removing the ability for us to lie to technology so that they get the correct result rather then the result we want they to get. In addition, Modar wanted their technology embedded in “every device with a camera”. This did raise a whole host of privacy issues and I learned the legalities of this:

- If you are storing images/video then it has to be OptIn data

- but you can process live stream and keep the analysis (and act on it) as long as you don’t store the actual data.

Modar demonstrated the software, showing the real time changes in which of the seven standard emotions was being demonstrated.

The fourth talk of this section was cancelled, so Daniel and Modar did an impromptu panel session on emotional recognition. The discussion in the room was very focused on privacy, and this was polarised by the views on stage: Daniel very keen to ensure that we respected privacy and were allowed to hide our emotions, Modar equally keen that the technology should be able to tell if we are lying or hiding something and that there should be passive monitoring. I can see this becoming a big issue and encourage you to think about the following scenarios:

- You buy a new tablet with an inbuilt camera – the T&Cs state that this will be on constantly and monitoring you. Would you use it (would you even read those T&Cs)? If you were happy with this then would you remember to tell someone before you lent them the device?

- If you got in a car, would you remember to ask the driver every time if they had opted in to be monitored and if this would affect how the car responds?

- Is it inevitable that we will all be constantly monitored in the very near future and our data used “against” us?

Lots to think about as we went into a break.

The final session of the day started with Bart Peintner of Loop AI Labs, also talking about understanding text. There is a very large set of test data but it is hard to understand. Many examples are specific to their industry so domain specific models are required in addition to consistency and structure in the test set. In order to get the meaning of a specific word, you really have to dig into the text and get the structure. Words can have multiple meanings in context and also nuances in context. It is also common for some people to use similar words because they don’t care about the distinction, whereas an expert would be specific (“memory” to mean “HDD” or “RAM” interchangeably). Bart demonstrated how they had applied this to film reviews, using deep learning for the phrase embeddings and created word relationships and then cluster analysis to produce categorisations.

There was then a panel session on deep learning in the real world. This was a little odd as of the three panelists, 2 weren’t using deep learning at all and the third was still at the “in progress stage”. Where there were some salient points about when it was relevant to use deep learning and not, on the whole this felt like a missed opportunity to get under the hood of using DL or not for real problems.

Next up was Tara Sainath, another Google employee, presenting the latest advancements in speech recognition. Their task was, given a set of acoustic observations, to predict sub units of words. Since there were far too many words to model they focused on the phonics. The previous set up used traditional algorithms and looked at 10-20k phonic states – could they do better? By combining architectures they created a 2 channel neural network looking at overlapping 25 ms windows of sound with a 10 ms shift. The effects of this move allowed far fewer phonic states to be needed and filtered out a lot of the noise from the samples. This has given Google a new model to work from, although the results were marginal over the old model. I suspect that these will improve very quickly.

The last talk in this section was by Clement Farabet from Twitter – their aim is to understand people from what they post and their relationships. Topics appear and disappear on twitter very quickly. Some tweets are impossible to get context without seeing an accompanying image. They also wanted to look at NSFW filtering, which is more difficult than might be expected. Only so much can be done with classical techniques (we learned early in the day that “classical” actually means 2 years ago or more 🙂 ). With some images, they get reposted many times, so the system needs to recognise copies, even if they have been altered. Clement showed an example of the Ellen selfie, along with some of the photoshopped versions that spawned from it, which were matched.

The relationship between the raw data and the prediction of interest in the tweet and its categorisation is highly non-linear. There are multiple problems and these can be solved with different tools. The beauty of Twitter is the amount of data that they have access to for learning – it’s something those of us in smaller companies can only dream of!

So that was day 1, and this is only a high level summary of the notes I took during the sessions. There are a lot of interesting avenues I need to investigate, and I suspect that the ideal will be in combining multiple approaches to achieve what I need. A lot of brain food and only a few hours to let it all sink in (especially for someone still slightly jetlagged!) before day 2 and more talks.

As one of the panelists on Day One, I very much appreciate that you took the time to record and share your thoughts. However, in my opinion it appears that you somewhat half listened, and then “shared” accordingly. Please be mindful that this can be both inaccurate and harmful. I assume you were talking about me, when you mentioned “in progress”. Please know that I have a long and in-depth career in technology — I have worked with and for many large corporations and startups. My expertise and opinion was not in relation to my current stealth project, which you may know is a rather common status, but in my overall opinion on current use in today’s world. Although, I get your point (that the 3 of us mostly agreeing that it’s early for deep learning to be mainstream in regards to enterprise adoption, was perhaps somewhat redundant) the fact that we did and came from quite different perspectives underlines the point. Also, please know that I personally heard from many attendees that were very happy to hear about some of the business concerns and realities surrounding deep learning and wished that we had gone on longer. I thought overall it was a good conference and I am very excited and bullish on the promise of deep learning and look forward to participating in the many exciting advances that it will bring.

hi Lauren, Thanks for reading! Whilst my primary focus was the technological information of the day, I agree I skimmed over the panel session somewhat in my summary above and could have expanded more on the points made by yourself and the other two panelists. At the time I tweeted the excellent point you made that there are “huge opportunities in deep learning but to translate them to corporate spend is a problem”, along with other comments made by Claudia that “not all problems need deep learning”. I would have loved to have seen a fourth panelist with a success story to aid the discussion and their absence is probably indicative of where the industry is at the moment.

This is indeed an exciting time and, as we discussed at the conference, it is a shame that companies with good ideas are not succeeding because they cannot balance their spend.