If you follow my posts on AI (here and on other sites) then you’ll know that I’m a big believer on ensuring that AI models are thoroughly tested and that their accuracy, precision and recall are clearly identified. Indeed, my submission to the Science and Technology select committee earlier this year highlighted this need, even though the algorithms themselves may never be transparent. It was not a surprise in the slightest that a paper has been released on tricking “black box” commercial AI into misclassification with minimal effort.

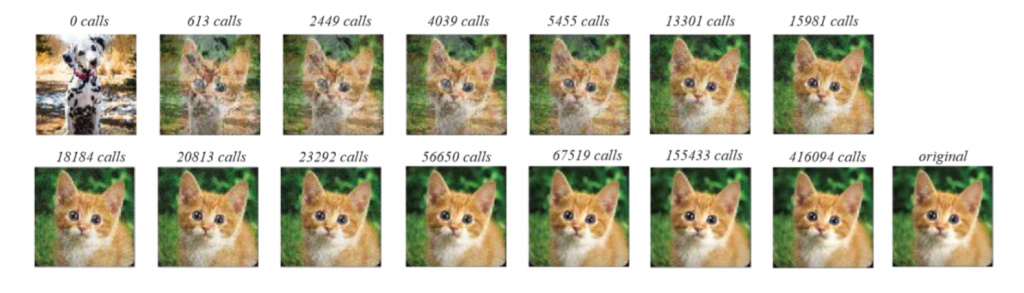

Brendel at al1 have developed a decision based attack called a boundary attack – they seek to create an adversarial example such that it is misclassified while being as close to the correctly classified image as possible in either an untargeted or targeted approach2. There are some very interesting results against two commercially available APIs (celebrities and logos) as well as against models created from CIFAR and MNIST. What is noteworthy is that Brendel shows that these APIs can be fooled by images that are mostly (human) visually indistinguishable from the original. This is a huge problem as we move into an era with greater and greater reliance on AI algorithms. We want algorithms that are robust.

Whether obvious or not, the ability to fool AI by a few pixels shows we have a way to go even in state of the art applications. Humans3 are not fooled so easily. The level of rigor we apply to the creation of artificial intelligence needs to increase in proportion to the impact of the decisions of those algorithms. We cannot allow our self driving vehicles to be fooled by imperfect road signs, the legal system to be reliant on automatic decisions that are influenced by irrelevant parameters4, or voice recognition on our bank accounts that can be easily imitated.

To be a scientist, you have the responsibility of thoroughly testing any assertion you make. This means doing everything you can to break or disprove your discovery. Just saving a percentage of your input data for post-training classification is not sufficient. If you do the bare minimum and then this is released into the wild you will be responsible for the consequences. If you’re happy with that then please for the sake of the industry change your job title to “Data Ignorist” so we know what your testing ethos is.

- DECISION-BASED ADVERSARIAL ATTACKS: RELIABLE ATTACKS AGAINST BLACK-BOX MACHINE LEARNING MODELS, Under review as a conference paper at ICLR 2018, available at: https://arxiv.org/pdf/1712.04248.pdf ↩

- Untargeted not caring what the misclassification is and targeted approach trying to retain a specific misclassification ↩

- Apart from very small children – I regularly use my daughter as an example of this where she had only seen black and white cows and brown horses – the first time she saw a black and white horse at age 3 she was adamant it was a cow 🙂 ↩

- In fairness, this study was looking at false positive bias when the system had been tuned to minimise false negative bias and you can’t have both with unbalanced populations. I go into more detail in this in my presentation on legal bias if you want to read more. ↩