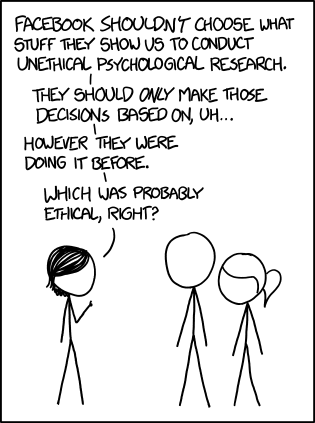

By now, most people who keep up with the news will have heard of Cambridge Analytica, the whistleblower Christopher Wylie, and the reports surrounding the harvesting of Facebook data and microtargeting, along with accusations of potentially illegal activity. Amid all of this coverage, I’ve also seen articles claiming that this is the “awakening” moment for ethics and morals in AI and data science in general: the point at which practitioners realise the impact of their work.

“Now I am become Death, the destroyer of worlds”, Oppenheimer

Continue reading Cambridge Analytica: not AI’s ethics awakening